4. Analysis and Evaluation Results

This chapter covers the final steps of an evaluation: analysing the data and dealing with the results. Some of the information relates specifically to the analysis of formal evaluations. However, the tips on dealing with (negative) evaluation results in the second section will also help you draw appropriate conclusions from informal evaluations.

4.1 How do I analyse evaluation data?

You will often have to start by preparing the data you have collected so that it can be analysed, e.g. by transcribing recordings (possibly with automated tools), anonymising or pseudonymising personal data, systematising and sorting verbal data, and sorting and cleaning numerical data. Various programs are available for analysing data (e.g. SPSS or MAXQDA). Depending on the amount of data, however, it is often enough to transfer it to clearly structured tables or text files for analysis.

“There is always a considerable challenge involved in analysing data that has been collected using different methods and tools” (Arnold et al. 2018: 409).

This is because we usually try to reconstruct contexts: we try to identify factors, problems or processes and to show how they are connected, so that we can derive measures for further positive development. We therefore examine these contexts to identify the prerequisites, conditions and causes behind the results. On this basis, we then try to draw conclusions and make recommendations (ibid.).

To reconstruct these contexts, it makes sense to conduct the analysis with other VE project participants and to interpret the results together. The perspective of your project partners (the other lecturers/teachers) is particularly important here: the statements or actions recorded in the data come from students in different (cultural, institutional, etc.) contexts and should therefore be interpreted with those contexts in mind. Always ask yourself: how else could the results be interpreted?

The next step is to document your results and interpretations. Identify problems and positive aspects (including those relating to the data collection itself), present your key results transparently and relate them to the VE project (Arnold et al. 2018: 411).

You can then draw conclusions by formulating suggestions for improvement. Ideally, present these to everyone involved for discussion so that you can agree on a plan of action together. At this stage it also makes sense to consider how the evaluation itself could be improved (e.g. by discussing its shortcomings). You should also consider whether, and in what form, you are willing and able to publish the results (within your educational institutions, as practical or academic publications, etc.). Sharing your experience and knowledge can often help others, and it allows you to get the most out of the effort you have put into evaluating your VE project.

4.2 How do I deal with my evaluation results?

You will find that many evaluation results suggest an obvious response, as they relate to specific problems or challenges that you have identified. For example, you may decide simply to replace any tools that are proving difficult to use or are causing technical problems. If students have frequently misunderstood certain tasks (perhaps because the wording is too complicated), the tasks can be reworded. Other results may not allow any response at all because of circumstances that cannot be changed (e.g. if the project was criticised as too short but the semesters of the participating groups only overlap for a certain number of weeks).

Many results can only be put into context when compared with other evaluations and their results. To make such comparisons, you could, for example, develop evaluations together with colleagues who also run VE projects and then discuss your results with them. Conducting evaluations repeatedly in your own VE projects can also provide a solid basis for reflecting on your own development and improving your VE projects.

12. Activity:

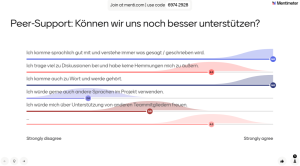

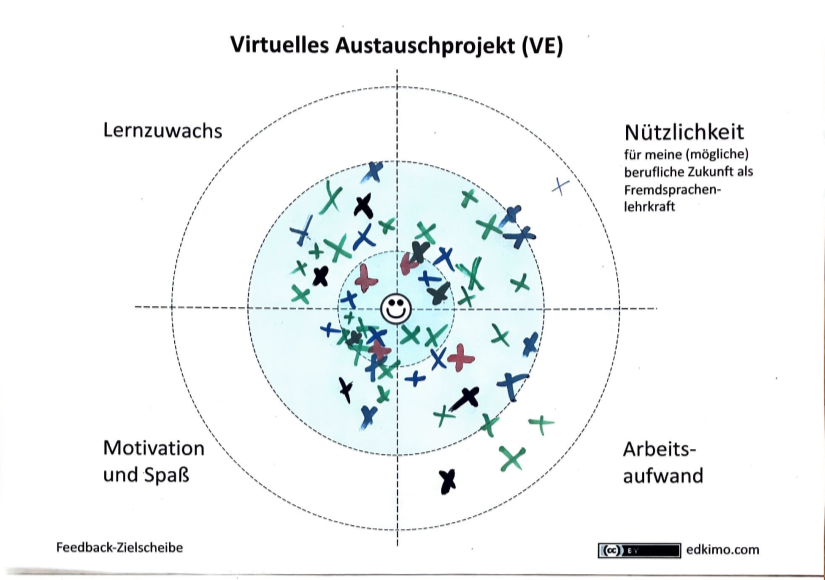

This activity presents examples of evaluation results from (fictitious) courses that performed poorly in certain areas. First, look at the example results and consider which of the didactic dimensions described in Chapter 3.3 (ProLehre n.d.) they relate to. What might have led to these results, and what could be done to improve them? Then read the suggestions for improvement “behind” each evaluation result (by moving the slider from left to right). Each one gives an overview of specific courses of action.

As a reminder, here are the didactic dimensions mentioned above (ibid.): course design and structure, teaching, use of media, teacher engagement and learning atmosphere, student requirements/level of difficulty, and acquisition of skills.

Please note: As the evaluation results come from different institutions, the positive end of the scale is on the right in some cases and on the left in others. Look closely at each scale.

Translated by: KERN AG, Sprachendienste Leipzig

This work © 2024 by Prof Dr Almut Ketzer-Nöltge is licensed under CC BY-NC-ND 4.0